Remote MCP Server on Cloudflare

Let's get a remote MCP server up and running on Cloudflare Workers, complete with OAuth login!

Develop locally

# clone the repository

git clone git@github.com:cloudflare/ai.git

# install dependencies

cd ai

npm install

# run locally

npx nx dev remote-mcp-server

You should now be able to open http://localhost:8787/ in your browser.

Connect the MCP inspector to your server

To explore your new MCP API, you can use the MCP Inspector.

- Start it with

npx @modelcontextprotocol/inspector

- Within the inspector, switch the Transport Type to SSE and enter http://localhost:8787/sse as the URL of the MCP server to connect to, then click "Connect".

- You will be taken to a (mock) user/password login screen. Enter any email and password to log in.

- You should be redirected back to the MCP Inspector, where you can now list and call any defined tools!
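To make the SSE transport selected above a little less opaque, here is a minimal sketch of how Server-Sent Events text is framed and parsed. It is not the Inspector's implementation: `parseSse` is a hypothetical helper, the sample payload is made up for illustration, and the parser is simplified (it expects the whole stream as one string and ignores the `id` and `retry` fields).

```typescript
// One parsed Server-Sent Event: an event name plus its data payload.
interface SseEvent {
  event: string;
  data: string;
}

// Parse SSE text into events (simplified sketch, see assumptions above).
function parseSse(text: string): SseEvent[] {
  const events: SseEvent[] = [];
  // Events are separated by a blank line; normalise CRLF line endings first.
  const blocks = text.replace(/\r\n/g, "\n").split("\n\n");
  for (const block of blocks) {
    let event = "message"; // default event type when no "event:" field is sent
    const dataLines: string[] = [];
    for (const line of block.split("\n")) {
      if (line === "" || line[0] === ":") continue; // empty line or comment
      const colon = line.indexOf(":");
      const field = colon === -1 ? line : line.slice(0, colon);
      let value = colon === -1 ? "" : line.slice(colon + 1);
      if (value[0] === " ") value = value.slice(1); // strip one leading space
      if (field === "event") event = value;
      else if (field === "data") dataLines.push(value);
    }
    // Only blocks that carry data produce an event.
    if (dataLines.length > 0) {
      events.push({ event, data: dataLines.join("\n") });
    }
  }
  return events;
}

// Illustrative stream: a named event, a keep-alive comment, and a default "message" event.
const sample =
  "event: endpoint\ndata: /sse/message?sessionId=abc\n\n" +
  ": keep-alive\n\n" +
  'data: {"jsonrpc":"2.0","id":1,"result":{}}\n\n';
const parsed = parseSse(sample);
```

Here `parsed` holds two events: the comment block carries no data and is skipped.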

Connect Claude Desktop to your local MCP server

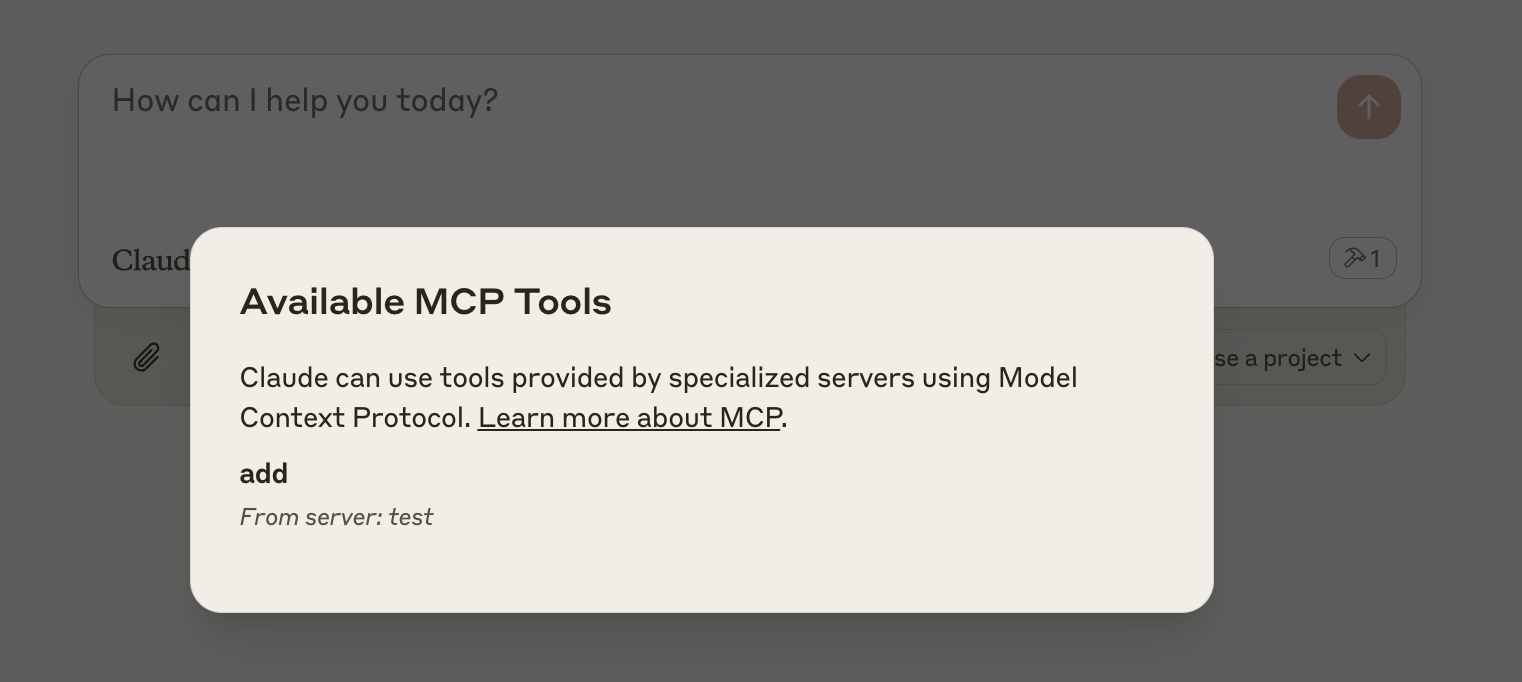

The MCP inspector is great, but we really want to connect this to Claude! Follow Anthropic's Quickstart and within Claude Desktop go to Settings > Developer > Edit Config to find your configuration file.

Open the file in your text editor and replace it with this configuration:

{
  "mcpServers": {
    "math": {
      "command": "npx",
      "args": [
        "mcp-remote",
        "http://localhost:8787/sse"
      ]
    }
  }
}

This will run a local proxy and let Claude talk to your MCP server over HTTP.

When you open Claude, a browser window should open and allow you to log in. You should then see the tools available in the bottom right. Given the right prompt, Claude should ask to call the tool.
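If you switch between several servers, the configuration can also be generated rather than edited by hand. The following is a minimal sketch under that assumption: `buildClaudeConfig` is a hypothetical helper (not part of Claude Desktop or mcp-remote) that reproduces the structure shown in this guide for a given server name and SSE URL.

```typescript
// Shape of one entry under "mcpServers" in the Claude Desktop configuration.
interface McpServerEntry {
  command: string;
  args: string[];
}

interface ClaudeConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Build a configuration that launches the mcp-remote proxy for the given SSE URL.
// Hypothetical helper for illustration only.
function buildClaudeConfig(serverName: string, sseUrl: string): ClaudeConfig {
  return {
    mcpServers: {
      [serverName]: { command: "npx", args: ["mcp-remote", sseUrl] },
    },
  };
}

// Pretty-printed JSON, ready to paste into the configuration file.
const localConfig = JSON.stringify(
  buildClaudeConfig("math", "http://localhost:8787/sse"),
  null,
  2
);
```

Note that this replaces the whole file content, as the step in this guide does; merge by hand if you already have other servers configured.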

Deploy to Cloudflare

- npx wrangler kv namespace create OAUTH_KV

- Follow the guidance to add the KV namespace ID to wrangler.jsonc

- npm run deploy
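For orientation, the entry that ends up in wrangler.jsonc is a `kv_namespaces` array whose items pair a `binding` name with the namespace `id`. The sketch below shows that shape on an already-parsed configuration object. `withKvNamespace` is a hypothetical helper, and both the `name` value and the `<your-namespace-id>` string are placeholders; use the ID that the create command prints.

```typescript
// One KV binding as it appears under "kv_namespaces" in the Wrangler configuration.
interface KvNamespaceBinding {
  binding: string; // name the Worker code uses to reach the namespace
  id: string; // ID printed by `wrangler kv namespace create`
}

interface WranglerConfig {
  kv_namespaces?: KvNamespaceBinding[];
  [key: string]: unknown;
}

// Return a copy of the config with the binding added,
// replacing any existing entry that uses the same binding name.
function withKvNamespace(
  config: WranglerConfig,
  binding: string,
  id: string
): WranglerConfig {
  const others = (config.kv_namespaces ?? []).filter(
    (ns) => ns.binding !== binding
  );
  return { ...config, kv_namespaces: [...others, { binding, id }] };
}

// Placeholder values: substitute your own project name and namespace ID.
const updated = withKvNamespace(
  { name: "remote-mcp-server" },
  "OAUTH_KV",
  "<your-namespace-id>"
);
```

In practice you will usually paste the snippet printed by Wrangler directly into wrangler.jsonc; the helper only illustrates what that snippet contains.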

Call your newly deployed remote MCP server from a remote MCP client

Just like you did above in "Develop locally", run the MCP inspector:

npx @modelcontextprotocol/inspector@latest

Then enter the workers.dev URL of your Worker (e.g. worker-name.account-name.workers.dev/sse) in the inspector as the URL of the MCP server to connect to, and click "Connect".

You've now connected to your MCP server from a remote MCP client.
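The example URL above is written without a scheme, while clients generally expect a full URL. A small sketch of normalising either form into the SSE endpoint follows; `toSseUrl` is a hypothetical helper, and it assumes the server exposes its endpoint at /sse as in this guide.

```typescript
// Turn a Worker host name or URL into the full SSE endpoint URL.
// Hypothetical helper; assumes the endpoint path is /sse.
function toSseUrl(hostOrUrl: string): string {
  let url = hostOrUrl.trim();
  // Add a scheme when only a host name was given.
  if (!/^https?:\/\//i.test(url)) {
    url = "https://" + url;
  }
  // Drop trailing slashes, then make sure the path ends in /sse.
  url = url.replace(/\/+$/, "");
  return /\/sse$/.test(url) ? url : url + "/sse";
}

const remoteUrl = toSseUrl("worker-name.account-name.workers.dev");
```

With the placeholder host above, `remoteUrl` is `https://worker-name.account-name.workers.dev/sse`; a local URL such as `http://localhost:8787/` keeps its `http` scheme.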

Connect Claude Desktop to your remote MCP server

Update the Claude configuration file to point to your workers.dev URL (e.g. worker-name.account-name.workers.dev/sse) and restart Claude:

{
  "mcpServers": {
    "math": {
      "command": "npx",
      "args": [
        "mcp-remote",
        "https://worker-name.account-name.workers.dev/sse"
      ]
    }
  }
}

Debugging

Should anything go wrong, it can help to restart Claude, or to connect directly to your MCP server from the command line:

npx mcp-remote http://localhost:8787/sse

In rare cases it may help to clear the files added to ~/.mcp-auth:

rm -rf ~/.mcp-auth

