Wren Engine

🤖 The Semantic Engine for Model Context Protocol (MCP) Clients and AI Agents 🔥

GitHub Watches: 14 · GitHub Forks: 69 · GitHub Stars: 282

Wren Engine is the semantic engine for MCP clients and AI agents. The Wren AI GenBI AI Agent is built on Wren Engine.

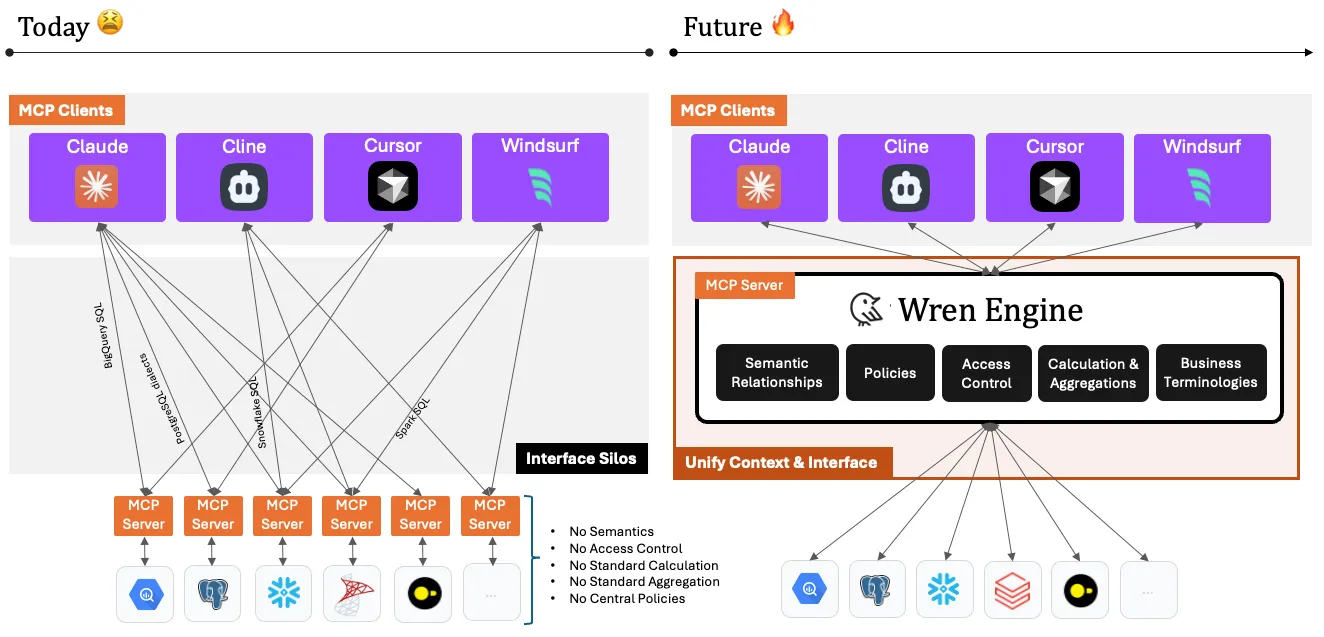

😫 Challenge Today

At the enterprise level, the stakes - and the complexity - are much higher. Businesses run on structured data stored in cloud warehouses, relational databases, and secure filesystems. From BI dashboards to CRM updates and compliance workflows, AI must not only execute commands but also understand and retrieve the right data, with precision and in context.

While many community and official MCP servers already support connections to major databases like PostgreSQL, MySQL, SQL Server, and more, there's a problem: raw access to data isn't enough.

Enterprises need:

- Accurate semantic understanding of their data models

- Trusted calculations and aggregations in reporting

- Clarity on business terms, like "active customer," "net revenue," or "churn rate"

- User-based permissions and access control

Natural language alone isn't enough to drive complex workflows across enterprise data systems. You need a layer that interprets intent, maps it to the correct data, applies calculations accurately, and ensures security.
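To make the idea of such a layer concrete, here is a minimal Python sketch. It is not Wren Engine's API or its Modeling Definition Language; the `Metric` class, the `resolve` function, and every table, column, and role name are hypothetical and exist only for illustration. It shows a business term being mapped to a single agreed-upon SQL expression, with a role check applied before any query is produced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A business term bound to one agreed-upon SQL expression."""
    name: str                 # column alias used in the generated SQL
    expression: str           # SQL aggregate over the source table
    table: str                # hypothetical source table
    allowed_roles: frozenset  # roles permitted to query this metric
    where: str = ""           # optional filter that is part of the definition


# Hypothetical definitions; table, column, and role names are made up.
METRICS = {
    "net revenue": Metric(
        name="net_revenue",
        expression="SUM(amount - discount - refund)",
        table="orders",
        allowed_roles=frozenset({"finance", "admin"}),
    ),
    "active customer": Metric(
        name="active_customers",
        expression="COUNT(DISTINCT customer_id)",
        table="orders",
        allowed_roles=frozenset({"finance", "sales", "admin"}),
        where="status = 'completed'",
    ),
}


def resolve(term: str, role: str) -> str:
    """Map a business term to SQL, enforcing role-based access first."""
    metric = METRICS.get(term.strip().lower())
    if metric is None:
        raise KeyError(f"unknown business term: {term!r}")
    if role not in metric.allowed_roles:
        raise PermissionError(f"role {role!r} may not query {metric.name}")
    sql = f"SELECT {metric.expression} AS {metric.name} FROM {metric.table}"
    if metric.where:
        sql += f" WHERE {metric.where}"
    return sql


if __name__ == "__main__":
    print(resolve("Net Revenue", role="finance"))
```

The point of the sketch is that the calculation and the permission rule live in one shared definition, so an agent asking for "net revenue" cannot silently invent a different formula or bypass access control.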

🎯 Our Mission

Wren Engine is on a mission to power the future of MCP clients and AI agents through the Model Context Protocol (MCP) — a new open standard that connects LLMs with tools, databases, and enterprise systems.

As part of the MCP ecosystem, Wren Engine provides a semantic engine that powers the next-generation semantic layer, enabling AI agents to access business data with accuracy, context, and governance.

By building the semantic layer directly into MCP clients such as Claude, Cline, and Cursor, Wren Engine empowers AI agents with precise business context and ensures accurate data interactions across diverse enterprise environments.

We believe the future of enterprise AI lies in context-aware, composable systems. That’s why Wren Engine is designed to be:

- 🔌 Embeddable into any MCP client or AI agentic workflow

- 🔄 Interoperable with modern data stacks (PostgreSQL, MySQL, Snowflake, etc.)

- 🧠 Semantic-first, enabling AI to “understand” your data model and business logic

- 🔐 Governance-ready, respecting roles, access controls, and definitions

With Wren Engine, you can scale AI adoption across teams — not just with better automation, but with better understanding.

Check our full article

🚀 Get Started with MCP

https://github.com/user-attachments/assets/dab9b50f-70d7-4eb3-8fc8-2ab55dc7d2ec

🤔 Concepts

- Powering Semantic SQL for AI Agents with Apache DataFusion

- Quick start with Wren Engine

- What is semantics?

- What is Modeling Definition Language (MDL)?

- Benefits of Wren Engine with LLMs

🚧 Project Status

Wren Engine is currently in beta. The project team is actively developing it and aims to release new versions at least biweekly.

🛠️ Developer Guides

The project consists of 4 main modules:

- ibis-server: the web server of Wren Engine, powered by FastAPI and Ibis

- wren-core: the semantic core, written in Rust and powered by Apache DataFusion

- wren-core-py: the Python binding for wren-core

- mcp-server: the MCP server of Wren Engine, powered by the MCP Python SDK

⭐️ Community

- Join our Discord server to give us feedback!

- If you run into any issues, please report them on GitHub Issues.

Reviews

user_fUhdoQZC

I have been using the Wren-engine developed by Canner, and it has significantly streamlined my workflow. Its robust features and seamless integration with other tools make it a standout. Check it out on GitHub: https://github.com/Canner/wren-engine. Highly recommend it for developers looking for efficiency and reliability.