MCP-LLM-Sandbox

Complete sandbox for augmenting LLM inference (local or cloud) with an MCP client-server. A low-friction testbed for MCP server validation and agent evaluation.

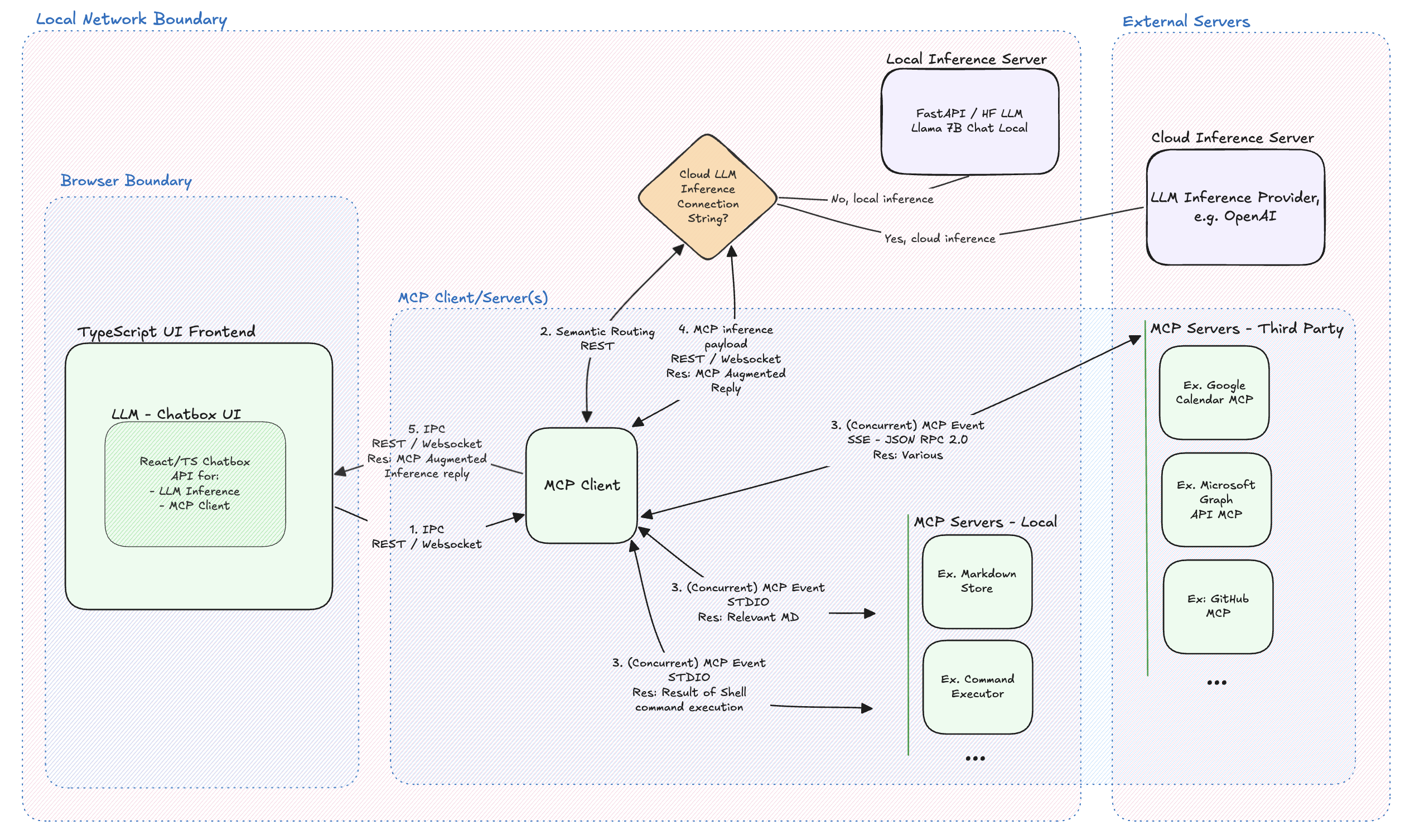

MCP Client-Server Sandbox for LLM Augmentation

Overview

Under Development

mcp-scaffold is a minimal sandbox for validating Model Context Protocol (MCP) servers against a working LLM client and a live chat interface. The aim is minimal friction when plugging in new MCP servers and evaluating LLM behavior.
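As a rough illustration of what validating a server can mean at the wire level, the sketch below builds a JSON-RPC 2.0 request (the envelope MCP messages use) and checks the shape of a `tools/list` reply. The helper names `make_request` and `validate_tools_list` are hypothetical and not part of this project, and the checks cover only a small subset of what the specification requires.

```python
from __future__ import annotations

import json
from typing import Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request (hypothetical helper for illustration)."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def validate_tools_list(raw_response: str, request_id: int) -> list[str]:
    """Return the advertised tool names, or raise ValueError on a malformed reply."""
    reply = json.loads(raw_response)
    if reply.get("jsonrpc") != "2.0" or reply.get("id") != request_id:
        raise ValueError("not a matching JSON-RPC 2.0 response")
    if "error" in reply:
        raise ValueError(f"server returned an error: {reply['error']}")
    tools = reply.get("result", {}).get("tools")
    if not isinstance(tools, list):
        raise ValueError("result.tools must be a list")
    names = []
    for tool in tools:
        # Each tool entry is expected to carry a name and a JSON Schema for its input.
        if (
            not isinstance(tool, dict)
            or not isinstance(tool.get("name"), str)
            or not isinstance(tool.get("inputSchema"), dict)
        ):
            raise ValueError(f"tool entry missing name or inputSchema: {tool!r}")
        names.append(tool["name"])
    return names
```

A check like this could run before any LLM is involved, so that a malformed server reply is caught separately from model behavior.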

Initially, a local LLM such as LLaMA 7B is used so that testing can stay entirely on the local network. Cloud inference will be supported next, so developers can validate against more powerful models without full local-network sandboxing. LLaMA 7B is large (~13 GB in the common Hugging Face format), but smaller models lack the conversational ability essential for validating MCP augmentation. LLaMA 7B is also a popular model for local inference, with over 1.3M downloads last month (Mar 2025).
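The ~13 GB figure is consistent with a back-of-the-envelope estimate: parameter count times bytes per parameter. The sketch below assumes roughly 6.7 billion parameters and 16-bit weights; both values are assumptions for illustration, and the function name is hypothetical.

```python
def weight_footprint_gb(n_params: float, bytes_per_param: float) -> float:
    """Approximate size of the raw model weights in decimal gigabytes."""
    return n_params * bytes_per_param / 1e9


# Assumed approximate parameter count for a "7B" model (not taken from this project).
LLAMA_7B_PARAMS = 6.7e9

fp16_gb = weight_footprint_gb(LLAMA_7B_PARAMS, 2)  # 16-bit weights, 2 bytes each
fp32_gb = weight_footprint_gb(LLAMA_7B_PARAMS, 4)  # 32-bit weights, 4 bytes each

print(f"fp16: {fp16_gb:.1f} GB, fp32: {fp32_gb:.1f} GB")
```

The estimate ignores tokenizer files, metadata, and runtime memory such as the KV cache, so actual disk and RAM usage will differ.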

Once the chatbox UI and LLM inference options are in place, an MCP client and a couple of demo MCP servers will be added. This project serves as both a reference architecture and a practical development environment, evolving alongside the MCP specification.
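To give a sense of how small a demo server can be, here is a minimal in-memory sketch that dispatches requests shaped like MCP's `tools/list` and `tools/call` methods. It is not the project's implementation: the `TOOLS` registry, the `echo` and `add` tools, and `handle_request` are all hypothetical, and transport (stdio or HTTP), initialization, and real input schemas are omitted.

```python
from __future__ import annotations

from typing import Any, Callable

# Hypothetical tool registry; a real server would expose these over a transport.
TOOLS: dict[str, Callable[..., str]] = {
    "echo": lambda text: text,
    "add": lambda a, b: str(a + b),
}


def handle_request(request: dict[str, Any]) -> dict[str, Any]:
    """Dispatch one JSON-RPC 2.0 request and return the response as a dict."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    method = request.get("method")

    if method == "tools/list":
        # Placeholder schema; a real server would describe each tool's arguments.
        tools = [{"name": name, "inputSchema": {"type": "object"}} for name in TOOLS]
        return {**base, "result": {"tools": tools}}

    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # -32602 is the JSON-RPC "invalid params" code.
            return {**base, "error": {"code": -32602, "message": "unknown tool"}}
        text = tool(**params.get("arguments", {}))
        return {**base, "result": {"content": [{"type": "text", "text": text}], "isError": False}}

    # -32601 is the JSON-RPC "method not found" code.
    return {**base, "error": {"code": -32601, "message": "method not found"}}
```

For example, `handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})` returns a result whose text content is `"5"`, which is the kind of output the chat client would feed back to the LLM.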

Architecture

Reviews

user_IGwZqoPm

As a dedicated user of mcp-llm-sandbox, I am genuinely impressed by its capabilities. The ease of setting up and seamless integration provided by tmcarmichael makes it a standout tool. The repository found at https://github.com/tmcarmichael/mcp-scaffold is well-documented, making onboarding straightforward even for newcomers. Highly recommend it for anyone looking to leverage powerful language models efficiently!