Tester-MCP-Client

Model Context Protocol (MCP) client for Apify Actors

2

Github Watches

6

Github Forks

45

Github Stars

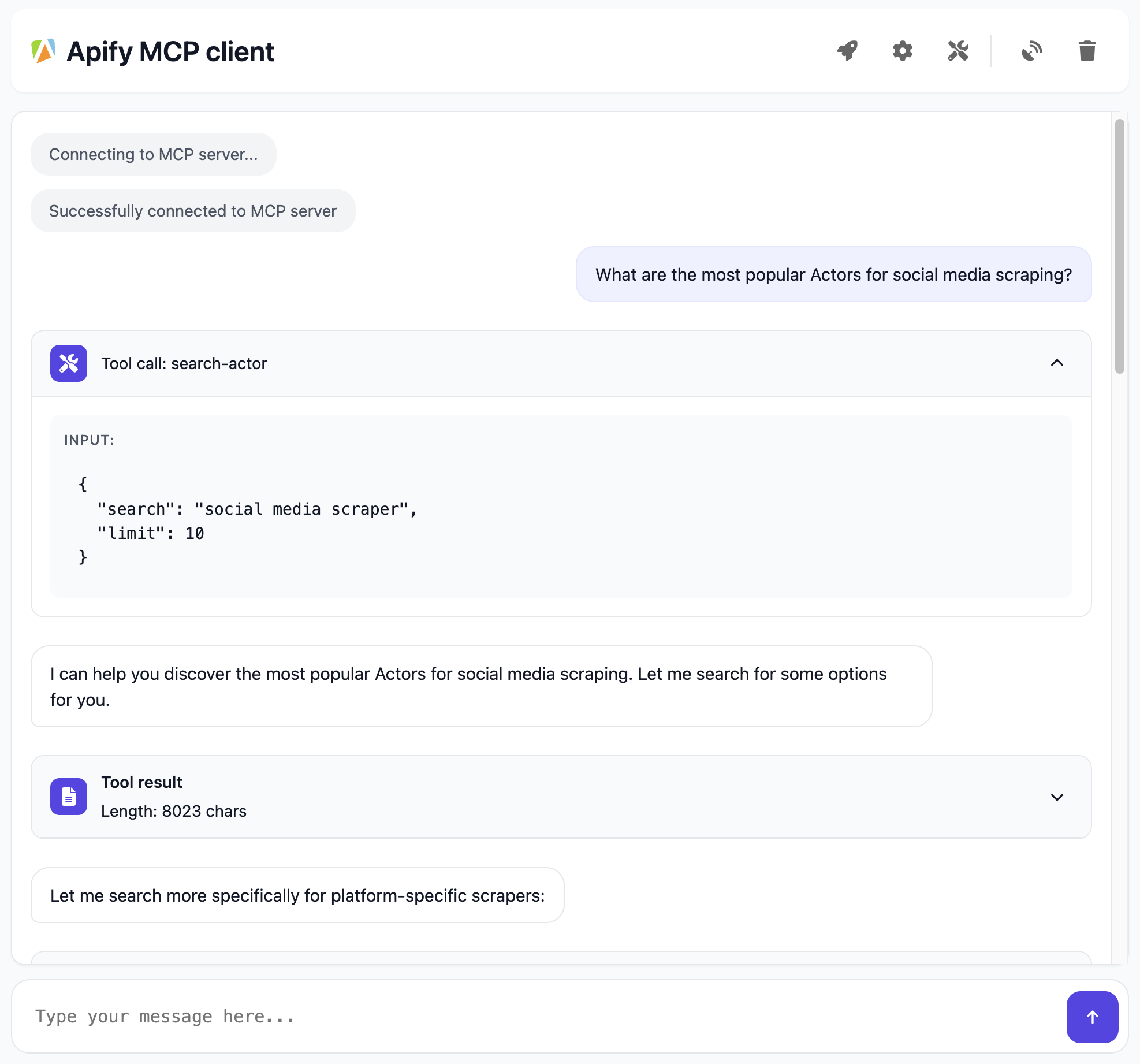

Tester Client for Model Context Protocol (MCP)

An implementation of a Model Context Protocol (MCP) client that connects to an MCP server using Server-Sent Events (SSE) and displays the conversation in a chat-like UI. It is a standalone Actor server designed for testing MCP servers over SSE, and it uses the pay-per-event pricing model.

For more information, see the Model Context Protocol website or the blog post What is MCP and why does it matter?.

Once you run the Actor, check the output or logs for a link to the chat UI, where you can interact with the MCP server. The URL varies from run to run and looks like this:

Navigate to https://...apify.net to interact with chat-ui interface.

🚀 Main features

- 🔌 Connects to an MCP server using Server-Sent Events (SSE)

- 💬 Provides a chat-like UI for displaying tool calls and results

- 🇦 Connects to an Apify MCP Server for interacting with one or more Apify Actors

- 💥 Dynamically uses tools based on context and user queries (if supported by a server)

- 🔓 Uses authorization headers and API keys for secure connections

- 🪟 Open source, so you can review it, suggest improvements, or modify it

🎯 What does Tester MCP Client do?

When connected to the Actors-MCP-Server, the Tester MCP Client provides an interactive chat interface where you can ask questions such as:

- "What are the most popular Actors for social media scraping?"

- "Show me the best way to use the Instagram Scraper"

- "Which Actor should I use to extract data from LinkedIn?"

- "Can you help me understand how to scrape Google search results?"

📖 How does it work?

The Apify MCP Client connects to a running MCP server over Server-Sent Events (SSE) and does the following:

- Initiates an SSE connection to the MCP server at /sse.

- Sends user queries to the MCP server via POST /message.

- Receives real-time streamed responses (via GET /sse) that may include LLM output and tool usage blocks.

- Based on the LLM response, orchestrates tool calls.

- Displays the conversation.
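The streamed responses in the steps above arrive as SSE frames. The following is a minimal sketch of how such frames could be split into events; it is not the client's actual code. The names `SseEvent` and `parseSseChunk` and the sample payload are hypothetical, and the sketch assumes `\n` line endings only.

```typescript
// One parsed Server-Sent Event: an event name plus its data payload.
interface SseEvent {
  event: string;
  data: string;
}

// Parse a chunk of SSE text into complete events.
// Returns the parsed events and any trailing partial text to carry over
// until the next chunk arrives.
function parseSseChunk(buffer: string): { events: SseEvent[]; rest: string } {
  const events: SseEvent[] = [];
  // Events are separated by a blank line.
  const blocks = buffer.split("\n\n");
  // The last element is either "" or an incomplete event; keep it for later.
  const rest = blocks.pop() ?? "";

  for (const block of blocks) {
    let event = "message"; // default event type in the SSE format
    const dataLines: string[] = [];
    for (const line of block.split("\n")) {
      if (line.startsWith(":")) continue; // comment or keep-alive line
      const sep = line.indexOf(":");
      const field = sep === -1 ? line : line.slice(0, sep);
      let value = sep === -1 ? "" : line.slice(sep + 1);
      if (value.startsWith(" ")) value = value.slice(1); // strip one leading space
      if (field === "event") event = value;
      if (field === "data") dataLines.push(value);
    }
    if (dataLines.length > 0) {
      events.push({ event, data: dataLines.join("\n") });
    }
  }
  return { events, rest };
}

// Illustrative input: one complete event followed by a partial one.
const sample =
  'event: endpoint\ndata: /message?sessionId=abc\n\ndata: {"role":"assistant"';
const parsed = parseSseChunk(sample);
```

Here `parsed.events` holds the one complete event, while `parsed.rest` keeps the unfinished frame so it can be prepended to the next chunk.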

⚙️ Usage

- Test any MCP server over SSE

- Test the Apify Actors MCP Server and its ability to dynamically select among 3000+ tools

Normal Mode (on Apify)

You can run the Tester MCP Client on Apify and connect it to any MCP server that supports SSE. Configuration can be done via the Apify UI or API by specifying parameters such as the MCP server URL, system prompt, and API key.
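As a rough illustration of the configuration described above, the sketch below assembles a run input object. Only `mcpSseUrl` and `systemPrompt` are names taken from this README; the `headers` field, the `Bearer` scheme, the `buildInput` helper, and the example URL are assumptions made for illustration, so check the Actor's input schema for the real field names.

```typescript
// Shape of the run input used in this sketch. `headers` is a hypothetical
// field; only mcpSseUrl and systemPrompt are named in this README.
interface TesterInput {
  mcpSseUrl: string;
  systemPrompt: string;
  headers: Record<string, string>;
}

// Build a run input, rejecting URLs that are clearly not HTTP(S).
function buildInput(
  mcpSseUrl: string,
  systemPrompt: string,
  apiToken: string,
): TesterInput {
  if (!/^https?:\/\//.test(mcpSseUrl)) {
    throw new Error(`MCP server URL must start with http:// or https://: ${mcpSseUrl}`);
  }
  return {
    mcpSseUrl,
    systemPrompt,
    // Assumption: the server accepts a bearer token in the Authorization header.
    headers: { Authorization: `Bearer ${apiToken}` },
  };
}

// Placeholder values only; replace them with your own server URL and token.
const input = buildInput(
  "https://example.com/sse",
  "You are a helpful assistant.",
  "YOUR-API-TOKEN",
);
```

Validating the URL before starting a run gives an early, readable error instead of a failed SSE connection later.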

Once you run the Actor, check the logs for a link to the Tester MCP Client UI, where you can interact with the MCP server. The URL differs from run to run and looks like this:

INFO Navigate to https://......runs.apify.net in your browser to interact with an MCP server.

Standby Mode (on Apify)

In progress 🚧

💰 Pricing

The Apify MCP Client is free to use. You only pay for LLM provider usage and resources consumed on the Apify platform.

This Actor uses pay-per-event, a flexible approach to pricing and monetizing AI agents.

Events charged:

- Actor start (based on memory used, charged per 128 MB unit)

- Running time (charged every 5 minutes, per 128 MB unit)

- Query answered (depends on the model used, not charged if you provide your own API key for LLM provider)

When you use your own LLM provider API key, running the MCP Client for 1 hour with 128 MB memory costs approximately $0.06. With the Apify Free tier (no credit card required 💳), you can run the MCP Client for 80 hours per month. Definitely enough to test your MCP server!
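The figures above can be checked with a little arithmetic. This sketch assumes the cost scales linearly with the number of 128 MB memory units and takes the approximate $0.06 per hour from the text; `estimateCostUsd` is a hypothetical helper, not part of the Actor.

```typescript
// Approximate figures quoted in the text above.
const COST_PER_HOUR_USD = 0.06; // 1 hour at 128 MB with your own LLM API key
const FREE_HOURS_PER_MONTH = 80;

// Estimate the platform cost of a run.
// Assumption: cost grows linearly with the number of 128 MB memory units.
function estimateCostUsd(hours: number, memoryMb: number): number {
  const units = Math.ceil(memoryMb / 128);
  return hours * units * COST_PER_HOUR_USD;
}

// Cost of using the full 80 free hours at 128 MB.
const monthlyFreeUsage = estimateCostUsd(FREE_HOURS_PER_MONTH, 128);
```

Under these assumptions, 80 hours at 128 MB comes to about $4.80 of platform usage per month.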

📖 How it works

Browser ← (SSE) → Tester MCP Client ← (SSE) → MCP Server

We create this chain to keep any custom bridging logic inside the Tester MCP Client, while leaving the main MCP Server unchanged. The browser uses SSE to communicate with the Tester MCP Client, and the Tester MCP Client relies on SSE to talk to the MCP Server. This separates extra client-side logic from the core server, making it easier to maintain and debug.

- Navigate to https://tester-mcp-client.apify.actor?token=YOUR-API-TOKEN (or http://localhost:3000 if you are running it locally).

- Files index.html and client.js are served from the public/ directory.

- Browser opens SSE stream via GET /sse.

- The user's query is sent with POST /message.

- Query processing:

  - Calls Large Language Model.

  - Optionally calls tools if required.

- For each result chunk, sseEmit(role, content)
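The last step pushes each result chunk to the browser over the open SSE stream. Below is a minimal sketch of what an `sseEmit(role, content)` helper might do. The README names the function but not its implementation, so the JSON payload shape and the `formatSseMessage` and `createSseEmitter` helpers are assumptions.

```typescript
// Serialize one chat message as a single SSE frame.
// JSON.stringify escapes newlines, so the payload always fits on one data line.
function formatSseMessage(role: string, content: string): string {
  const payload = JSON.stringify({ role, content });
  return `data: ${payload}\n\n`; // a blank line terminates the event
}

// Create an sseEmit function bound to a writer, for example the write
// method of an open HTTP response (hypothetical wiring).
function createSseEmitter(write: (frame: string) => void) {
  return function sseEmit(role: string, content: string): void {
    write(formatSseMessage(role, content));
  };
}

// Usage: collect frames in memory instead of writing to a real response.
const frames: string[] = [];
const sseEmit = createSseEmitter((frame) => {
  frames.push(frame);
});
sseEmit("assistant", "Hello");
sseEmit("tool", "line1\nline2");
```

Binding the writer once keeps the call site identical to the `sseEmit(role, content)` form shown in the list above.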

Local development

The Tester MCP Client Actor is open source and available on GitHub, allowing you to modify and develop it as needed.

Download the source code:

git clone https://github.com/apify/tester-mcp-client.git

cd tester-mcp-client

Install the dependencies:

npm install

Create a .env file with the following content (refer to the .env.example file for guidance):

APIFY_TOKEN=YOUR_APIFY_TOKEN

LLM_PROVIDER_API_KEY=YOUR_API_KEY

Default values for settings such as mcpSseUrl, systemPrompt, and others are defined in the const.ts file. You can adjust these as needed for your development.
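To illustrate how the .env values and the defaults could fit together, here is a minimal sketch. It is not the contents of const.ts: the `loadConfig` helper, the `ClientConfig` shape, and the default values shown are placeholders, while `APIFY_TOKEN`, `LLM_PROVIDER_API_KEY`, `mcpSseUrl`, and `systemPrompt` are the names used in this README.

```typescript
interface ClientConfig {
  apifyToken: string;
  llmProviderApiKey: string;
  mcpSseUrl: string;
  systemPrompt: string;
}

// Placeholder defaults for illustration; the real ones live in const.ts.
const DEFAULTS = {
  mcpSseUrl: "https://example.com/sse",
  systemPrompt: "You are a helpful assistant.",
};

// Build the configuration from environment variables plus optional overrides.
// Throws early if a required variable is missing.
function loadConfig(
  env: Record<string, string | undefined>,
  overrides: Partial<typeof DEFAULTS> = {},
): ClientConfig {
  const apifyToken = env["APIFY_TOKEN"];
  const llmProviderApiKey = env["LLM_PROVIDER_API_KEY"];
  if (!apifyToken) throw new Error("APIFY_TOKEN is not set");
  if (!llmProviderApiKey) throw new Error("LLM_PROVIDER_API_KEY is not set");
  return {
    apifyToken,
    llmProviderApiKey,
    mcpSseUrl: overrides.mcpSseUrl ?? DEFAULTS.mcpSseUrl,
    systemPrompt: overrides.systemPrompt ?? DEFAULTS.systemPrompt,
  };
}

// Usage with literal values; in a Node.js process you would pass process.env.
const config = loadConfig({
  APIFY_TOKEN: "YOUR_APIFY_TOKEN",
  LLM_PROVIDER_API_KEY: "YOUR_API_KEY",
});
```

Failing fast on a missing variable makes a misconfigured .env file obvious at startup rather than at the first request.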

Run the client locally:

npm start

Navigate to http://localhost:3000 in your browser to interact with the MCP server.

Happy chatting with Apify Actors!

ⓘ Limitations and feedback

The client does not support all MCP features, such as Prompts and Resources. It also does not store the conversation, so refreshing the page clears the chat history.
